Troubleshooting Network Director

Troubleshooting resources and tips for Network Director.

On this page:

- Connection Errors Returned for Configured Context Paths

- Unable to Access Policy Manager Container

- Virtual Service Client Cannot Connect to Physical Service

- Cannot Connect to Physical Service that Requires WS-Security Headers

- Error message re blocking timeout 10000 ms

- Change the Authorization HTTP Header to Case Insensitive Header

- Debugging the SSL handshake and Jetty internal errors

- Logging of TLS errors and the corresponding alert code

- Set cache expiration property

- Network Director performance issues after upgrade

Connection Errors Returned for Configured Context Paths

A number of different issues might cause a connection error to be returned from the Network Director. For example:

- There might be a 404 error when loading the WSDL for a SOAP service. If you use a browser to open {endpoint}?wsdl and get a 404 error instead of the WSDL file, first check that the virtual service is providing a WSDL. For instructions, see To check that the Network Director can retrieve the virtual service WSDL later on this page.

- For a SOAP or REST service, you might send a request to the virtual service and receive a 404 in response.

If you are getting a 404 when invoking a virtual service, here are some things you can check:

- Check whether the service is being hosted. To do this, enable the Jetty information URL and verify that all services/context paths are available. For instructions, see Check Context Paths Hosted on Network Director below.

- Make sure that the context path being sent by the consumer matches the context path for the virtual service being invoked.

- For a REST service:

- Verify that the context path being sent by the consumer matches the virtual service context path.

- Make sure the correct content type is being set in the HTTP header.

- If there is a payload, make sure the payload is consistent with the content-type.

- Check the usage logs to see whether the 404 is coming from the physical service. If it is, verify the physical service access point.

- Check if there is an issue with the firewall or load balancer that the consumer is sending the request through.
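When working through the checks above, it helps to see exactly which status code comes back, and from which hop. The following is a minimal bash sketch that assumes curl is available. `vs_url` and `http_status` are hypothetical helper names and are not part of the product. `nd.example.com` and `9900` are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for 404 triage; not part of Network Director.

# Build the virtual service URL from host, port, and context path,
# accepting the context path with or without a leading slash.
vs_url() {
  local host="$1" port="$2" path="${3#/}"
  printf 'http://%s:%s/%s\n' "$host" "$port" "$path"
}

# http_status URL [extra curl options...]
# Print only the HTTP status code (curl prints 000 if no HTTP response arrived).
http_status() {
  local url="$1"
  shift
  curl -s -o /dev/null -w '%{http_code}' "$@" "$url"
}
```

For example, `http_status "$(vs_url nd.example.com 9900 /orders)"` prints only the status code. A plain GET may not match how the consumer calls a REST resource. In that case, pass the same method, content type, and payload the consumer uses, for example `http_status "$(vs_url nd.example.com 9900 /orders)" -X POST -H 'Content-Type: application/json' --data @body.json`.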

Check Context Paths Hosted on Network Director

If a 404 is returned to the consumer when trying to invoke a virtual service, check the context paths hosted on the Network Director.

Solution:

Possible reasons for this issue are:

- The service is not deployed on the Network Director. See To check whether the service is deployed to the Network Director below.

- A 404 was returned from the physical service (for example, because the physical service is down). See Virtual Service Cannot Connect to Physical Service below.

To check whether the service is deployed to the Network Director

Check the contexts hosted on the Network Director.

There is a URL you can use to list all the context paths hosted on the Network Director. To view the context paths, you must first enable a configuration setting as described below.

To check context paths from the Network Director

- Log in to the Akana Administration Console for the Network Director instance at http://{nd_host}:{nd_port}/admin/console.html.

- Click the Configuration tab.

- In the Configuration Categories section on the left, choose com.soa.platform.jetty.

- Set the value of the jetty.information.servlet.enable property to true.

- Click Apply Changes.

- Check the URL to get the list of context paths on the Network Director and see if your service is listed:

http://{nd_host}:{nd_port}/admin/com.soa.transport.jetty/information
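After the property is applied, you can also script this check. The following is a minimal bash sketch. It assumes that the information page lists each context path as plain text, and that curl can reach the Admin Console without extra authentication (if yours requires credentials, add curl's `-u user:password` option). The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers; they assume jetty.information.servlet.enable=true has been applied.

# Print the Jetty information page that lists the context paths hosted on Network Director.
jetty_info() {
  curl -sS "http://$1:$2/admin/com.soa.transport.jetty/information"
}

# Succeed if the context path given as $1 appears anywhere in the text on stdin.
# This is a plain substring match, so /orders also matches /orders-v2.
context_listed() {
  grep -qF -- "$1"
}
```

For example: `jetty_info nd.example.com 9900 | context_listed /orders && echo listed || echo "not listed"`. Because the match is a plain substring match, confirm a hit by reading the page itself.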

Virtual Service Cannot Connect to Physical Service

If the virtual service cannot connect to the next-hop service or physical service, two possible causes are:

- The physical service is down.

- The wrong access point has been configured for the physical service.

Solution:

To help determine where the issue lies, first send a request directly to the endpoint configured as the physical service access point, and verify that you receive a response. Depending on the results:

- If the physical service is down, contact the physical service provider.

- If the direct request was successful and there is a firewall between the virtual service and the physical service, check whether there is an issue with either of the following:

- Firewall

- Proxy server
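To tell a service outage apart from a network-path problem, it can help to run the direct request twice (once from your workstation and once from the Network Director machine) and note how curl fails each time. The sketch below assumes bash and curl. The exit codes are curl's own documented codes. The hints attached to them, the helper names, and the 10-second connect timeout are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for calling the physical service directly, bypassing Network Director.

# Map a curl exit status to a short hint. The codes are curl's; the hints are rough guidance only.
curl_hint() {
  case "$1" in
    0)  echo "response received" ;;
    5)  echo "could not resolve proxy: check proxy settings" ;;
    6)  echo "could not resolve host: check the access point host name" ;;
    7)  echo "connection failed: service down, wrong port, or blocked" ;;
    28) echo "timed out: possible firewall or proxy dropping traffic" ;;
    35) echo "TLS handshake failed" ;;
    52) echo "empty reply from server" ;;
    60) echo "server certificate not trusted" ;;
    *)  echo "curl error $1: see the curl exit code list" ;;
  esac
}

# probe_access_point URL: print the HTTP status and a hint based on curl's exit status.
probe_access_point() {
  local code rc
  code="$(curl -s -o /dev/null --connect-timeout 10 -w '%{http_code}' "$1")"
  rc=$?
  printf 'HTTP %s, %s\n' "$code" "$(curl_hint "$rc")"
}
```

For example, run `probe_access_point https://backend.example.com/orders` on both machines. Suppose your workstation gets an HTTP status but the Network Director host times out (exit code 28). That result points toward the firewall or proxy rather than the physical service. curl also honors proxy environment variables such as `https_proxy`, so check them on the machine where you run the test.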

Unable to Access Policy Manager Container

In some cases, consumers have been unable to connect to the Policy Manager services. No error is reported when bundles load, and the log shows the following entry:

ERROR - Attempting to invoke method refresh on com.soa.container.configuration.service.ContainerConfigPollingService.

One possible cause of this issue is that the maximum number of threads for the HTTP listener is set to 0 and must be increased.

Solution:

As a workaround, you can edit the system.properties file to create a temporary HTTPS listener entry. This will allow you to log in and increase the number of threads on the HTTP listener. You must then restore the original system.properties file.

To increase the maximum number of threads for the HTTP listener

- Go to the ./sm60/instances/{pm_instance} folder on your machine and find the system.properties file. Save a backup copy of the original file.

- In the file, find the org.osgi.service.http.port property and set it to 9900 (or your original port).

- Add the following property (or, if it is already present, modify its value) as shown below:

org.osgi.service.http.port.secure: set it to 9943 (or another available port)

Note: This change creates a temporary HTTPS listener so that you can log in and update the thread count for the HTTP listener.

- Restart the container.

- Go to https://host:9943/ (or applicable port number) and log in to the Policy Manager console.

- Change the default listener pool parameters to valid values. For example:

- min thread=5

- max thread=200

- Restore the original system.properties file.

- Restart the container.
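If you prefer to script the temporary edit rather than make it by hand, the following bash sketch backs up system.properties, sets a property, and restores the original afterwards. It assumes the file contains one `key=value` entry per line, with no spaces around `=`. `backup_props`, `set_prop`, and `restore_props` are hypothetical helpers, and the `.orig` backup suffix is an arbitrary choice.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the temporary HTTPS listener workaround.
# Assumes one key=value entry per line with no spaces around "=".

# Keep an untouched copy of the original file.
backup_props() {
  cp -p -- "$1" "$1.orig"
}

# set_prop FILE KEY VALUE: replace the KEY line if present, otherwise append it.
# Writes back with cat so the original file's ownership and permissions are kept.
set_prop() {
  local file="$1" key="$2" value="$3" tmp rc
  tmp="$(mktemp)" || return 1
  awk -v k="$key" -v v="$value" '
    index($0, k "=") == 1 { print k "=" v; found = 1; next }
    { print }
    END { if (!found) print k "=" v }
  ' "$file" > "$tmp" && cat -- "$tmp" > "$file"
  rc=$?
  rm -f -- "$tmp"
  return "$rc"
}

# Put the original file back once the listener thread counts have been fixed.
restore_props() {
  mv -- "$1.orig" "$1"
}
```

A typical sequence follows. First, set `props=./sm60/instances/{pm_instance}/system.properties`, substituting your instance name. Then run `backup_props "$props"` and `set_prop "$props" org.osgi.service.http.port.secure 9943`, and restart the container. After you fix the listener thread pool in the console, run `restore_props "$props"` and restart again.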

Virtual Service Client Cannot Connect to Physical Service

If the client application cannot connect to the physical service, check the following:

- If it isn't a SOAP service, go to Hosted Service Issues below to check if the service is running.

- If it is a SOAP service, check that the virtual service is providing a WSDL. See To check that the Network Director can retrieve the virtual service WSDL below.

- Depending on the results, do one of the following:

- If there is an error retrieving the WSDL, there is an issue with hosting the virtual service. Go to the Hosted Service Issues section below.

- If there was no error with the WSDL, the issue is at the policy/contract level. For additional troubleshooting steps, see To check policy configuration below. If the issue is with the policy or contract, there should be an error in the alerts or the monitoring data for the service.

To check that the Network Director can retrieve the virtual service WSDL

- Open a browser.

- Enter the URL of the virtual service:

{nd_host}:{nd_port}/{service_context_path}

- Append ?wsdl to the end of the URL.

The WSDL file for the virtual service should be displayed in the browser. Proceed depending on what you see:

- If the WSDL file is not displayed, there is an issue with the hosted service. For additional troubleshooting steps, go to the next section, Hosted Service Issues, below.

- If the WSDL file is displayed, the service is hosted correctly. Either the physical service address is wrong, or there is an issue with the physical service. Check the access point in the Policy Manager user interface to make sure it is correct. For example, the physical endpoint might have changed after setup. Also make sure that you can successfully call the physical service in the same way you called the virtual service above, using the physical service URL configured in Policy Manager.
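You can also run the browser check from the command line, which makes it easy to repeat against the physical service URL. The following is a minimal bash sketch that assumes plain HTTP on the Network Director listener. The helper names are hypothetical. `looks_like_wsdl` is only a rough text heuristic that looks for a WSDL 1.1 `definitions` or WSDL 2.0 `description` root element.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking the WSDL exposed by a service.

# Build http://{nd_host}:{nd_port}/{service_context_path}?wsdl (plain HTTP assumed).
wsdl_url() {
  local host="$1" port="$2" path="${3#/}"
  printf 'http://%s:%s/%s?wsdl\n' "$host" "$port" "$path"
}

# Rough heuristic: succeed if stdin contains a <definitions> (WSDL 1.1) or
# <description> (WSDL 2.0) element, with or without a namespace prefix.
looks_like_wsdl() {
  grep -Eq '<([A-Za-z_][A-Za-z0-9_.-]*:)?(definitions|description)[[:space:]>]'
}
```

For example: `curl -sS "$(wsdl_url nd.example.com 9900 orders)" | looks_like_wsdl && echo "WSDL returned" || echo "no WSDL"`. To check the physical service, pipe `curl -sS '<physical_url>?wsdl'` into `looks_like_wsdl` the same way.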

Hosted Service Issues

If you cannot connect to the service, and you were not able to retrieve the WSDL file, follow the steps below.

To resolve issues with hosting the virtual service

- Verify the access point being used. To do this:

- In Policy Manager, from the organization tree, select the virtual service.

- In the right pane, click the Access Points tab.

- Verify that the access point is still listed for that service. If it isn't listed, go to the Network Director container, Hosted Services tab, and click Host Virtual Service. Select the virtual service to host on the Network Director container or cluster.

- Next, verify the status of the container. To do this:

- In Policy Manager, from the organization tree, select the container that hosts the virtual service.

- In the right pane, click the Details tab.

- Check the status of the container. The status should display as Started, with a green icon.

- Take additional steps depending on the container status and settings:

- If the container has not started, check that the bundles have loaded.

- Stopped or Unresponsive: In Network Director, go to the Network Director Container Detail Page for the container and view the status indicator for the container. There are three statuses: Started, Stopped, and Unresponsive. The status is communicated from Network Director to Policy Manager every 15 seconds using the Policy Manager service called Container State Service. If Policy Manager doesn't receive a response from Network Director in a certain amount of time, the status is reported as Unresponsive. If there is no response for an extended period of time the status is reported as Stopped. This indicates a communication problem between Policy Manager and Network Director.

- Check the Network Director log file and see if there are any issues connecting to Policy Manager.

- Check the Policy Manager log to see if there are any errors when the Network Director is connecting to Policy Manager to update its state.

- HTTPS address listed as service access point: Verify that the HTTPS listener has PKI keys and an X.509 certificate attached.

To check policy configuration

- Log in to the Policy Manager console.

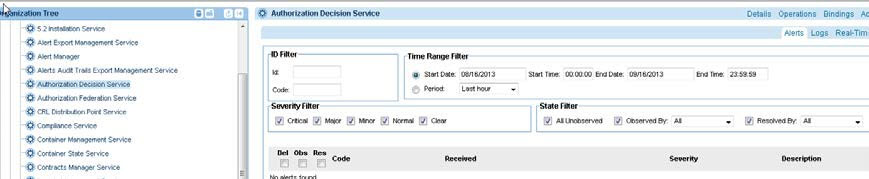

- Select the virtual service and then check for errors under Workbench > Monitoring > Alerts.

- Make sure:

- The consumer is included in the contract of the service.

- The contract is activated.

- There is only one contract active for the service.

Contract Not Configured

If an Authorization Failed message is returned when consuming a service, one possible cause is that the contract is not configured.

Solution:

To check whether the contract is configured correctly, and correct as needed, follow the steps below.

To configure a contract

- Log in to the Policy Manager console.

- From the organization tree, select the virtual service.

- Verify that the intended consumer is listed under the consumers portlet for the virtual service.

Note: If the intended consumer is not listed, you'll need to create another version of the contract. On the action panel to the right, click Start New Version. Under consumer identities, add the new consumer and then activate the new version of the contract. This will create a new version that includes the appropriate consumer.

- Verify that the approval status of the provided contract is Activated.

- If the contract is listed as Deactivated, activate it:

- From the Consumers portlet, select the contract.

- From the actions portlet, select Activate Contract.

- Wait for approximately one minute for the contract to go into effect.

Cannot Connect to Physical Service that Requires WS-Security Headers

If requests to a physical service that requires WS-Security headers fail, it might be because the WS-Security headers are being stripped out.

By default, the Network Director strips out the WS-Security headers before sending the request to the physical endpoint.

Solution:

If the WS-Security headers are required by the physical endpoint, you can set a property in the Akana Administration Console for the Network Director instance so that the headers are not stripped out.

To modify configuration to keep the WS-Security headers

- Log in to the Akana Administration Console for the Network Director instance (http://{nd_host}:{nd_port}/admin).

- Click the Configuration tab.

- In the Categories portlet, select the com.soa.wssecurity category.

- Set the value of the keepsecurityHeader property to true.

- Click Apply Changes.
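For scripted or repeated setups, you may be able to set the same property over HTTP. This sketch rests on an assumption: it reuses the `PUT /admin/config/{category}/{property}` request shape shown for Policy Manager under Set cache expiration property below, and assumes the Network Director Admin Console accepts the same request. Confirm this against your version before relying on it. `config_body` and `set_admin_property` are hypothetical names, and the helper assumes that names and values contain no double quotes.

```shell
#!/usr/bin/env bash
# Hypothetical sketch. ASSUMPTION: the Network Director Admin Console accepts the same
# PUT /admin/config/{category}/{property} request that this page shows for Policy Manager.

# Build the {"name": ..., "value": ...} body used in that example.
# Assumes the name and value contain no double quotes or backslashes.
config_body() {
  printf '{"name": "%s", "value": "%s"}\n' "$1" "$2"
}

# set_admin_property BASE_URL CATEGORY NAME VALUE USER PASSWORD
# --user makes curl send an HTTP Basic Authorization header.
set_admin_property() {
  local base="${1%/}" category="$2" name="$3" value="$4"
  curl -sS --request PUT "${base}/admin/config/${category}/${name}" \
    --user "$5:$6" \
    --header 'Content-Type: application/json' \
    --data "$(config_body "$name" "$value")"
}
```

For example: `set_admin_property http://nd.example.com:9900 com.soa.wssecurity keepsecurityHeader true admin 'secret'`. If the request is rejected, use the console steps above.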

Error message re blocking timeout 10000 ms

In certain scenarios, for example when a large payload is being transmitted, the transmission time might exceed the default timeout value of 10000 milliseconds (10 seconds). The blocking timeout is a security precaution that helps prevent malicious denial-of-service (DoS) attacks such as Slowloris attacks. For more information, see https://en.wikipedia.org/wiki/Slowloris_(computer_security).

Solution:

If blocking timeouts are occurring, you can increase the blocking timeout value to allow additional time, for example for uploading large payloads.

Increasing this value gives a client more time to send data before timing out. However, be aware that a slow client could then tie up resources for longer. In addition, depending on the number of transactions, open connections and the threads handling these requests remain in use longer, until each transaction completes.

By default, the property that allows you to increase the blocking timeout value does not appear in the Akana Administration Console, but you can add it. The property is a Jetty server property, so support for it depends on Jetty. For more information, refer to the Jetty documentation: https://archive.eclipse.org/jetty/9.4.2.v20170220/apidocs/org/eclipse/jetty/server/HttpConfiguration.html.

To increase the blocking timeout value

- Log in to the Akana Administration Console for the Network Director instance (http://{nd_host}:{nd_port}/admin).

- Click the Configuration tab.

- Under Categories, find and select the com.soa.platform.jetty category.

- At the bottom of the page, click Add Property.

- Define the property as follows:

- Property Name: jetty.server.config.blockingTimeout

- Property Value: The default is 10000 milliseconds (10 seconds). Increase the value as needed; for example, 30000 for 30 seconds, 60000 for one minute, or 120000 for two minutes.

- Click Apply to add the new property, and then click Apply Changes to save the changes to the page.

- Restart the container.
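Before and after changing the value, it can help to reproduce the timeout deliberately with a large, throttled upload. This bash sketch assumes curl and a virtual service that accepts a POST body. `make_payload` and `slow_post` are hypothetical helpers. The zero-filled payload and the `application/octet-stream` content type are arbitrary test choices, and your service may reject them for unrelated reasons.

```shell
#!/usr/bin/env bash
# Hypothetical sketch for reproducing a blocking timeout with a large, slow upload.

# make_payload FILE SIZE_KB: write SIZE_KB kibibytes of zero bytes to FILE.
make_payload() {
  head -c "$(( $2 * 1024 ))" /dev/zero > "$1"
}

# slow_post URL FILE RATE: POST FILE throttled to RATE (curl syntax, e.g. 50k),
# then print the HTTP status and the total transfer time.
slow_post() {
  curl -s -o /dev/null --limit-rate "$3" \
    --header 'Content-Type: application/octet-stream' \
    --data-binary "@$2" \
    -w 'HTTP %{http_code} after %{time_total}s\n' "$1"
}
```

For example, `make_payload /tmp/payload.bin 1024` followed by `slow_post http://nd.example.com:9900/upload /tmp/payload.bin 50k` sends 1 MiB at about 50 KiB per second, which takes roughly 20 seconds. If the blocking timeout is the cause, you would expect this request to fail with the 10000 ms default. It should then succeed after jetty.server.config.blockingTimeout is raised above the transfer time.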

Change the Authorization HTTP Header to Case Insensitive Header

An API request that uses Basic authentication (“Authorization: Basic”) can fail when the letter case of the Authorization header name that reaches the physical service does not match the case the service expects.

Solution:

Update the following configuration in the Network Director Admin Console:

com.soa.http.client.core -> header.formatter.interceptor.templates -> Authorization:{authorization}
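HTTP header names are case-insensitive according to the HTTP specification, but some services still compare them exactly. To check whether the physical service behaves this way, you can call it directly with different spellings of the header name. This bash sketch assumes that curl and base64 are available. The helper names, URL, and credentials are hypothetical. The request is forced to HTTP/1.1 because HTTP/2 always sends header names in lowercase.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: does the physical service care about Authorization header-name case?

# Encode user:password for HTTP Basic authentication (no trailing newline).
basic_token() {
  printf '%s:%s' "$1" "$2" | base64 | tr -d '\n'
}

# try_header_case URL HEADER_NAME TOKEN: send one spelling of the header name and print
# the resulting status. --http1.1 keeps curl from lowercasing names as HTTP/2 requires.
try_header_case() {
  local code
  code="$(curl -s -o /dev/null --http1.1 -w '%{http_code}' \
    --header "$2: Basic $3" "$1")"
  printf '%s -> HTTP %s\n' "$2" "$code"
}
```

For example: `for name in Authorization authorization AUTHORIZATION; do try_header_case https://backend.example.com/api "$name" "$(basic_token user 'secret')"; done`. If only some spellings succeed, the service is sensitive to header-name case, which is the situation the configuration above is meant to address.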

Debugging the SSL handshake and Jetty internal errors (also reported as SSL handshake errors)

To debug the SSL events that occur during an SSL handshake, start the container with the -Djavax.net.debug system property and then check the stdout.log file. The file contains detailed information about any handshake failures.

Use one of the following values for the system property when starting the container:

-Djavax.net.debug=ssl:handshake (only handshake messages are logged)

-Djavax.net.debug=all (all messages are logged)

For example:

./startup.sh gw-1 -Djavax.net.debug=ssl:handshake -bg

./startup.sh gw-1 -Djavax.net.debug=all -bg

From version 2024.1.0, the startup.sh script also creates a separate stderr.log file, in addition to the existing stdout.log file. The stderr.log file captures only Jetty internal errors, which are sometimes reported as SSL handshake errors.
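Debug output from `-Djavax.net.debug=all` can be very large, so a quick filter helps. This minimal bash sketch assumes you pass the directory that contains stdout.log (and stderr.log from 2024.1.0); that directory's location depends on your installation. `scan_tls_logs` and its search terms are illustrative choices rather than a product tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print handshake-related lines from the container logs,
# prefixed with the file name and line number.

scan_tls_logs() {
  local dir="${1:-.}" log
  for log in "$dir/stdout.log" "$dir/stderr.log"; do
    [ -f "$log" ] || continue
    grep -H -n -i -E -e 'handshake|ssl|alert' "$log"
  done
}
```

For example, `scan_tls_logs /path/to/instance/logs | less`. With `-Djavax.net.debug=all`, expect many matches on the `ssl` term, so narrowing the pattern to `handshake` may be more useful.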

Logging of TLS errors and the corresponding alert code

The logging messages for API calls that fail with TLS errors have been enhanced to make it easier to troubleshoot issues with OAuth providers and API gateway traffic. These alerts now include more detailed information that helps identify connectivity issues for both inbound and outbound requests, such as:

- SSL handshake-related errors

- Peer certificate validation errors during the SSL handshake

The following table illustrates the various scenarios and their corresponding alert codes that arise during handshake failures.

| Scenario | Alert code | Description |
|---|---|---|
| Invoking the downstream service can result in a TLS mismatch (TLSv1.3 vs. TLSv1.2), even when the client's certificate is present in the trust store. | 9032 | The handshake failed. Received fatal alert protocol_version. |
| | 9033 | The connection could not be established due to an SSL exception, indicating either an SSL handshake error or an I/O error. |
| Invoking the downstream service can result in a TLS mismatch (TLSv1.1 vs. TLSv1.2), even when the client's certificate is present in the trust store. | 9032 | The connection could not be established. Handshake failed because the remote host terminated the handshake. |
| | 9033 | The connection could not be established due to an SSL exception, indicating either an SSL handshake error or an I/O error. |
| If the client's certificate is not present in the trust store, the listener will be unable to verify the certificate, even if the necessary certificate is configured for the listener (no TLS mismatch). | 30226 | The certificate trust verification failed because the certificate is not trusted. |
| | 30212 | The certificate cannot be trusted. |
| The trust store contains an expired certificate, despite no TLS mismatch (upload a certificate that is nearing its expiration date). | 30226 | The certificate trust verification failed because the certificate is expired. |
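To narrow down which row of the table applies, you can test the downstream service's protocol versions and a certificate's expiry with the openssl command-line tool. This bash sketch assumes openssl is installed. The helper names are hypothetical, and s_client's output wording can vary between OpenSSL versions. Recent OpenSSL builds may also refuse `-tls1_1` locally, regardless of what the server supports.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for narrowing down handshake failures; requires openssl.

# Extract the negotiated protocol from s_client output ("TLSv1.2", "TLSv1.3", or "(NONE)").
negotiated_protocol() {
  sed -n 's/^New, \([^,]*\), Cipher is .*/\1/p' | head -n 1
}

# try_tls HOST PORT VERSION_FLAG: attempt a handshake limited to one protocol version
# (for example -tls1_2 or -tls1_3). Empty output usually means the TCP connection failed.
try_tls() {
  openssl s_client -connect "$1:$2" -servername "$1" "$3" </dev/null 2>/dev/null |
    negotiated_protocol
}

# cert_expired PEM_FILE: succeed (0) if expired, fail (1) if still valid,
# return 2 if the file cannot be read as a certificate.
cert_expired() {
  openssl x509 -noout -in "$1" >/dev/null 2>&1 || return 2
  ! openssl x509 -checkend 0 -noout -in "$1" >/dev/null
}
```

For example, compare `try_tls backend.example.com 443 -tls1_2` with `try_tls backend.example.com 443 -tls1_3`. If one prints a protocol and the other prints `(NONE)`, the service accepts only one version, which matches the 9032/9033 mismatch rows. For the expired-certificate row, export the certificate to PEM and run `cert_expired ./backend-cert.pem && echo expired`.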

Set cache expiration property

If you set a cache expiration value greater than about 24 days (for example, 90 days), the configuration may fail because the value in milliseconds exceeds the supported integer range (2147483647 ms is about 24.8 days). In that case, use the API to update the property and set the expiration value.

Example:

To set the cache expiry time property, use the following API:

curl --location --request PUT 'http://<pm_host>:<pm_port>/admin/config/com.soa.subsystems/trusted.ca.cert.keystore.spi.expireIntervalMillis' \
  --header 'Content-Type: application/json' \
  --header 'Authorization: <Basic Authentication>' \
  --data '{
    "name": "trusted.ca.cert.keystore.spi.expireIntervalMillis",
    "value": "7776000000"
  }'

Network Director performance issues after upgrade

If you experience performance degradation in Akana versions later than 2024.1.4, it may be caused by frequent certificate retrieval requests between Network Director and Policy Manager.

Solution:

To improve performance, update the following configuration in the Network Director Admin Console:

com.soa.mp.core.client -> trusted.ca.cert.keystore.spi.expireIntervalMillis

Change the default value of 60000 ms (1 minute) to a longer interval or set it to -1.

This property determines how often the Network Director retrieves updated certificates from the Policy Manager trust store. Increasing the interval reduces the number of retrieval requests to Policy Manager, which helps improve overall performance.

Select an interval that aligns with how often trust store certificates are updated. If you set an interval longer than 20 days, use the Admin APIs.
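The millisecond values involved are easy to get wrong. The value 7776000000 in the Set cache expiration property example is 90 days, which is larger than 2147483647, the largest signed 32-bit integer (about 24.8 days in milliseconds). This bash sketch converts days to milliseconds and applies the 20-day guidance above. The helper names are hypothetical, and treating the limit as a signed 32-bit integer is an assumption based on this page's note about the supported integer range.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for choosing an expireIntervalMillis value.

# Convert whole days to milliseconds (shell arithmetic is 64-bit on common platforms).
days_to_ms() {
  echo $(( $1 * 24 * 60 * 60 * 1000 ))
}

# Succeed if the value no longer fits in a signed 32-bit integer.
exceeds_int32() {
  [ "$1" -gt 2147483647 ]
}

# Apply this page's guidance: intervals longer than 20 days go through the Admin APIs.
where_to_set() {
  local limit=$(( 20 * 24 * 60 * 60 * 1000 ))
  if [ "$1" -gt "$limit" ]; then
    echo "Admin API"
  else
    echo "Admin Console"
  fi
}
```

For example, `days_to_ms 7` prints 604800000 (one week), which `where_to_set` maps to the Admin Console. `days_to_ms 90` prints the 7776000000 used in the earlier example, which needs the Admin API.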